Sony reports quarterly earnings results showing a nice increase in its image sensor sales and outlook:

"We have upwardly revised our sales forecast by 20 billion yen due to an increase in our forecast for unit sales of image sensors for mobile products, compared with the August forecast. Primarily due to this increase in sales, we have upwardly revised our forecast for operating income by 20 billion yen to 150 billion yen."

Sony specifically emphasizes the automotive image sensor it has introduced recently:

Tuesday, October 31, 2017

Monday, October 30, 2017

TowerJazz and Yuanchen Microelectronics Partner for BSI Manufacturing in China

GlobeNewsWire: TowerJazz partners with Changchun Changguang Yuanchen Microelectronics Technology Inc. (YCM), a BSI process manufacturer based in Changchun, China, to provide BSI processing for CMOS sensor wafers manufactured by TowerJazz. This is the first time BSI will be offered by a foundry to the high-end photography market, including large formats requiring stitching.

The partnership will allow TowerJazz to serve its customers with BSI technology in mass production, at competitive prices, starting in the middle of 2018. The new BSI technology is aimed at high-end photography, automotive, AR/VR, and other CIS markets. YCM provides BSI processing for both 200mm and 300mm CIS wafers.

The new partnership also provides a roadmap for wafer stacking, including state-of-the-art pixel-level wafer stacking.

"TowerJazz is recognized worldwide as the leader of CMOS image sensor manufacturing platforms for high-end applications," said Dabing Li, YCM CEO. "The collaboration with TowerJazz will certainly allow us to bring unique and high value technology to the market quickly and in high volume, especially to the growing Chinese market where TowerJazz already plays a significant role."

"I am thrilled with the capabilities we developed with YCM, supporting our continued leadership in many different high-end growing markets. In addition, the excellent collaboration with YCM enables us further penetration into this very fast growing high-end CMOS camera market in China," said Avi Strum, SVP and GM, CMOS Image Sensor Business Unit. "I have very high confidence in the technical capabilities of this partnership."

Chronoptics ToF Pixel Response Measurement

New Zealand-based Chronoptics releases its PixelScope instrument for measuring pixel timing response parameters:

Sunday, October 29, 2017

Mobileye CTO on Autonomous Cars Safety

Mobileye CTO Amnon Shashua talks about autonomous car sensing and data processing for safety at the 2017 World Knowledge Forum in Seoul, South Korea. The talk does not address imaging directly; rather, it presents a way to avoid processing the huge amount of imaging data from over 30 billion miles of driving while still ensuring the safety of autonomous driving:

Saturday, October 28, 2017

Samsung on Structured Light Camera Outdoor Performance

Samsung publishes a paper "Outdoor Operation of Structured Light in Mobile Phone" by Byeonghoon Park, Yongchan Keh, Donghi Lee, Yongkwan Kim, Sungsoon Kim, Kisuk Sung, Jungkee Lee, Donghoon Jang, and Youngkwon Yoon, presented at the IEEE International Conference on Computer Vision (ICCV) 2017.

"Active Depth Camera does not operate well especially outdoors because signal light is much weaker than ambient sunlight. To overcome this problem, Spectro-Temporal Light Filtering, adopting a light source of 940nm wavelength, has been designed. In order to check and improve outdoor depth quality, mobile phones for proof-of-concept have been implemented with structured light depth camera enclosed. We present its outdoor performance, featuring a 940nm vertical cavity surface emitting laser as a light source and an image sensor with a global shutter to reduce ambient light noise. The result makes us confident that this functionality enables mobile camera technology to step into another stunning stage."

Mantis Vision's Coded Light algorithm was used in this work. The company has published a video on its technology:

Meanwhile, SINTEF Digital publishes an Optics Express paper "Design tool for TOF and SL based 3D cameras" by Gregory Bouquet, Jostein Thorstensen, Kari Anne Hestnes Bakke, and Peter Risholm. A structured light camera excels at short range, as in Apple Face ID, while a ToF camera is a better fit for long-range applications such as Google Tango:
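One general reason structured light favors short range comes from triangulation geometry, a textbook relation rather than anything taken from the SINTEF paper: depth is z = f·b/d, so a fixed disparity error δd produces a depth error δz ≈ z²·δd/(f·b) that grows with the square of distance. A minimal sketch, with the focal length, baseline and disparity error as hypothetical example values:

```python
def sl_depth_error(z_m: float, focal_px: float, baseline_m: float,
                   disparity_err_px: float) -> float:
    """Approximate depth uncertainty (m) of a triangulation-based camera.

    From z = f*b/d, differentiating gives |dz| = z**2 * |dd| / (f*b).
    """
    return z_m ** 2 * disparity_err_px / (focal_px * baseline_m)


# Hypothetical parameters: 600 px focal length, 2.5 cm baseline, 0.1 px disparity error.
for z in (0.3, 1.0, 3.0):
    err_mm = 1000 * sl_depth_error(z, focal_px=600.0, baseline_m=0.025, disparity_err_px=0.1)
    print(f"z = {z:.1f} m -> depth error ~ {err_mm:.1f} mm")
```

With these assumed numbers the error grows from about 0.6 mm at 0.3 m to about 60 mm at 3 m. A ToF camera measures depth from round-trip time or phase, so it has no baseline term of this kind.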

Friday, October 27, 2017

Bloomberg: Apple Relaxes Face ID Projector Tolerances

Bloomberg publishes some info about iPhone X depth camera production difficulties:

"The 3-D sensor has three key elements: a dot projector, flood illuminator and infrared camera. The flood illuminator beams infrared light, which the camera uses to establish the presence of a face. The projector then flashes 30,000 dots onto the face which the phone uses to decide whether to unlock the home screen. The system uses a two-stage process because the dot projector makes big computational demands and would rapidly drain the battery if activated as frequently as the flood illuminator.

The dot projector is at the heart of Apple’s production problems. Precision is key. If the microscopic components are off by even several microns, a fraction of a hair’s breadth, the technology might not work properly, according to people with knowledge of the situation.

The fragility of the components created problems for LG Innotek Co. and Sharp Corp., both of which struggled to combine the laser and lens to make dot projectors. To boost the number of usable dot projectors and accelerate production, Apple relaxed some of the specifications for Face ID, according to a person with knowledge of the process. As a result, it took less time to test completed modules, one of the major sticking points, the person said.

To make matters worse, Apple lost one of its laser suppliers early on. Finisar Corp. failed to meet Apple’s specifications in time for the start of production. That left Apple reliant on fewer laser suppliers: Lumentum Holdings Inc. and II-VI Inc."
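The two-stage process described in the quote amounts to gating the expensive stage on the cheap one. A toy sketch of that control flow; the function and its input list are hypothetical and do not reflect Apple's actual firmware:

```python
from typing import List, Tuple


def two_stage_unlock(face_present: List[bool]) -> Tuple[int, int]:
    """Count emitter activations for a flood-then-dot gating scheme.

    face_present[i] is the (hypothetical) result of the flood-illuminator
    presence check for frame i. The flood illuminator runs on every frame;
    the dot projector fires only on frames where a face was detected.
    """
    flood_activations = 0
    dot_activations = 0
    for present in face_present:
        flood_activations += 1      # cheap stage: runs every frame
        if present:
            dot_activations += 1    # expensive stage: only when a face is seen
    return flood_activations, dot_activations


frames = [False] * 8 + [True] * 2
print(two_stage_unlock(frames))  # (10, 2)
```

In this example the power-hungry dot projector fires on 2 of 10 frames instead of all 10.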

ON Semi Improves NIR Sensitivity of Security Sensor

BusinessWire: ON Semi's AR0239 1080p90 CMOS sensor with 3μm BSI pixels uses an improved NIR process and DR-Pix technology, giving a 21% improvement in responsivity and a 10% improvement in QE versus the device’s predecessor.

The new sensor delivers 1080p video at 90fps or two- or three-exposure 1080p HDR video at up to 30fps. Engineering samples are available now with full production planned for November 2017.
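ON Semi does not describe how the AR0239 combines its two or three exposures. As a generic illustration only, a common textbook approach picks, per pixel, the longest exposure that is not saturated and rescales it by the exposure-time ratio. Exposure times, the 12-bit saturation level and function names below are assumptions:

```python
from typing import List, Sequence


def merge_exposures(frames: Sequence[Sequence[float]],
                    exposure_times: Sequence[float],
                    saturation: float) -> List[float]:
    """Merge co-registered exposures into one linear HDR signal.

    frames[k][i] is the raw value of pixel i in exposure k; exposure_times
    must be sorted from longest to shortest. For each pixel, the longest
    unsaturated exposure is used and scaled to the longest exposure's units.
    """
    t_ref = exposure_times[0]
    merged = []
    for i in range(len(frames[0])):
        value = None
        for frame, t in zip(frames, exposure_times):
            if frame[i] < saturation:
                value = frame[i] * (t_ref / t)
                break
        if value is None:  # saturated even in the shortest exposure
            value = saturation * (t_ref / exposure_times[-1])
        merged.append(value)
    return merged


# Hypothetical 3-exposure example with a 16:1 ratio between consecutive exposures.
long_exp = [100.0, 4095.0, 4095.0]
mid_exp = [6.25, 2000.0, 4095.0]
short_exp = [0.4, 125.0, 3000.0]
print(merge_exposures([long_exp, mid_exp, short_exp], [16.0, 1.0, 1 / 16], saturation=4095.0))
# [100.0, 32000.0, 768000.0]
```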

Thursday, October 26, 2017

ST Reports Imaging Revenue Growth

SeekingAlpha publishes the ST Q3 2017 earnings call transcript. A few quotes on the image sensor business:

"Let's move now to our Imaging Product Division, which we report in Others. Imaging registered very strong sequential revenue growth, reflecting the initial ramp in wireless applications of ST's new program, including the company's Time-of-Flight and new specialized imaging technologies. Other revenues more than doubled in the third quarter.

...we can confirm that based on current visibility, ...the Imaging division anticipate sequential revenues growth from the third into the fourth quarter and anticipate a double-digit year-over-year growth in the fourth quarter in respect to the fourth quarter of the prior year.

...we target the LIDAR device, which are really a key enabling component of the overall smart driving application. And our technology is really a key competitive factor for us to target this kind of LIDAR activity definitively."

Image Sensor Papers at IEDM 2017

IEDM 2017, to be held on Dec. 2-6 in San Francisco, has published its agenda. Here is the list of image sensor-related papers, starting with three Sony papers:

3.2 Pixel/DRAM/logic 3-layer stacked CMOS image sensor technology (Invited)

H. Tsugawa, H. Takahashi, R. Nakamura, T. Umebayashi, T. Ogita, H. Okano, K. Iwase, H. Kawashima, T. Yamasaki*, D. Yoneyama*, J. Hashizume, T. Nakajima, K. Murata, Y. Kanaishi, K. Ikeda*, K. Tatani, T. Nagano, H. Nakayama*, T. Haruta and T. Nomoto, Sony Semiconductor

We developed a CMOS image sensor (CIS) chip, which is stacked pixel/DRAM/logic. In this CIS chip, three Si substrates are bonded together, and each substrate is electrically connected by two-stacked through-silicon vias (TSVs) through the CIS or dynamic random access memory (DRAM). We obtained low resistance, low leakage current, and high reliability characteristics of these TSVs. Connecting metal with TSVs through DRAM can be used as low resistance wiring for a power supply. The Si substrate of the DRAM can be thinned to 3 μm, and its memory retention and operation characteristics are sufficient for specifications after thinning. With this stacked CIS chip, it is possible to achieve less rolling shutter distortion and produce super slow motion video.

16.4 Near-infrared Sensitivity Enhancement of a Back-illuminated Complementary Metal Oxide Semiconductor Image Sensor with a Pyramid Surface for Diffraction Structure

I. Oshiyama, S. Yokogawa, H. Ikeda, Y. Ebiko, T. Hirano, S. Saito, T. Oinoue, Y. Hagimoto, H. Iwamoto, Sony Semiconductor

We demonstrated the near-infrared (NIR) sensitivity enhancement of back-illuminated complementary metal oxide semiconductor image sensors (BI-CIS) with a pyramid surface for diffraction (PSD) structures on crystalline silicon and deep trench isolation (DTI). The incident light diffracted on the PSD because of the strong diffraction within the substrate, resulting in a quantum efficiency of more than 30% at 850 nm. By using a special treatment process and DTI structures, without increasing the dark current, the amount of crosstalk to adjacent pixels was decreased, providing resolution equal to that of a flat structure. Testing of the prototype devices revealed that we succeeded in developing unique BI-CIS with high NIR sensitivity.

16.1 An Experimental CMOS Photon Detector with 0.5e- RMS Temporal Noise and 15µm pitch Active Sensor Pixels

T. Nishihara, M. Matsumura, T. Imoto, K. Okumura, Y. Sakano, Y. Yorikado, Y. Tashiro, H. Wakabayashi, Y. Oike and Y. Nitta, Sony Semiconductor

This is the first reported non-electron-multiplying CMOS Image Sensor (CIS) photon-detector for replacing Photo Multiplier Tubes (PMT). 15µm pitch active sensor pixels with complete charge transfer and readout noise of 0.5 e- RMS are arrayed and their digital outputs are summed to detect micro light pulses. Successful proof of radiation counting is demonstrated.
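The abstract only says that the pixels' digital outputs are summed to detect light pulses. One way to picture this, offered as an assumption-laden sketch rather than Sony's actual circuit: each pixel's signal (Poisson photoelectrons plus 0.5 e- RMS Gaussian read noise) is compared with a hypothetical 0.5 e- threshold to give a 1-bit output, and the array count is the sum of those bits. Function names, the threshold and the photon rates are illustrative:

```python
import math
import random


def poisson(rng: random.Random, mean: float) -> int:
    """Draw a Poisson sample (Knuth's method, fine for small means)."""
    limit, k, p = math.exp(-mean), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def summed_digital_output(n_pixels: int, mean_photons: float, read_noise_e: float,
                          threshold_e: float, rng: random.Random) -> int:
    """Threshold each pixel's signal to one bit, then sum the bits over the array."""
    count = 0
    for _ in range(n_pixels):
        signal = poisson(rng, mean_photons) + rng.gauss(0.0, read_noise_e)
        count += 1 if signal > threshold_e else 0
    return count


rng = random.Random(0)
dark = summed_digital_output(1000, 0.0, 0.5, 0.5, rng)   # no light pulse
pulse = summed_digital_output(1000, 1.0, 0.5, 0.5, rng)  # ~1 photoelectron per pixel
print(dark, pulse)  # the pulse raises the summed count well above the dark level
```

With 0.5 e- RMS noise, roughly 16% of dark pixels still cross a 0.5 e- threshold, which is why the sketch relies on the summed count over many pixels rather than on any single pixel.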

8.6 High-Performance, Flexible Graphene/Ultra-thin Silicon Ultra-Violet Image Sensor

A. Ali, K. Shehzad, H. Guo, Z. Wang*, P. Wang*, A. Qadir, W. Hu*, T. Ren**, B. Yu*** and Y. Xu, Zhejiang University

*Chinese Academy of Sciences, **Tsinghua University, ***State University of New York

We report a high-performance graphene/ultra-thin silicon metal-semiconductor-metal ultraviolet (UV) photodetector, which benefits from the mechanical flexibility and high-percentage visible light rejection of ultra-thin silicon. The proposed UV photodetector exhibits high photo-responsivity, fast time response, high specific detectivity, and UV/Vis rejection ratio of about 100, comparable to the state-of-the-art Schottky photodetectors.

16.2 SOI monolithic pixel technology for radiation image sensor (Invited)

Y. Arai, T. Miyoshi and I. Kurachi, High Energy Accelerator Research Organization (KEK)

SOI pixel technology is developed to realize monolithic radiation imaging device. Issues of the back-gate effect, coupling between sensors and circuits, and the TID effect have been solved by introducing a middle Si layer. A small pixel size is achieved by using the PMOS and NMOS active merge technique.

16.3 Back-side Illuminated GeSn Photodiode Array on Quartz Substrate Fabricated by Laser-induced Liquidphase Crystallization for Monolithically-integrated NIR Imager Chip

H. Oka, K. Inoue, T. T. Nguyen*, S. Kuroki*, T. Hosoi, T. Shimura and H. Watanabe, Osaka University, *Hiroshima University

A back-side illuminated single-crystalline GeSn photodiode array has been demonstrated on a quartz substrate for a group-IV-based NIR imager chip. Owing to the high crystalline quality of the GeSn array formed by the laser-induced liquid-phase crystallization technique, a significantly enhanced NIR photoresponse with a high responsivity of 1.3 A/W was achieved under backside illumination.

16.5 Industrialised SPAD in 40 nm Technology

S. Pellegrini, B. Rae, A. Pingault, D. Golanski*, S. Jouan*, C. Lapeyre** and B. Mamdy*, STMicroelectronics, *TR&D, **CEA-Leti, Minatec

We present the first mature SPAD device in advanced 40 nm technology with dedicated microlenses. A high fill factor >70% is reported with a low DCR median of 50cps at room temperature and a high PDP of 5% at 840nm. This digital node is portable to a 3D stacked technology.

16.6 A Back-Illuminated 3D-Stacked Single-Photon Avalanche Diode in 45nm CMOS Technology

M.-J. Lee, A. R. Ximenes, P. Padmanabhan, T. J. Wang*, K. C. Huang*, Y. Yamashita*, D. N. Yaung* and E. Charbon

Ecole Polytechnique Fédérale de Lausanne (EPFL), *Taiwan Semiconductor Manufacturing Company (TSMC)

We report on the world's first back-illuminated 3D-stacked single-photon avalanche diode (SPAD) in 45nm CMOS technology. This SPAD achieves a dark count rate of 55.4 cps/µm², a maximum photon detection probability of 31.8% at 600nm, over 5% in the 420-920nm wavelength range, and timing jitter of 107.7ps at 2.5V excess bias voltage and room temperature. To the best of our knowledge, these are the best results ever reported for any back-illuminated 3D-stacked SPAD technology.

26.2 High-yield passive Si photodiode array towards optical neural recording

D. Mao, J. Morley, Z. Zhang, M. Donnelly, and G. Xu, University of Massachusetts Amherst

We demonstrate a high-yield, passive Si photodiode array, aiming to establish a miniaturized optical recording device for in-vivo use. Our fabricated array features high yield (>90%), high sensitivity (down to 32 μW/cm²), high speed (1000 frames per second by scanning over up to 100 pixels), and sub-10 μW power.
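Assuming the 1000 frames per second applies to a full sequential scan of 100 pixels read one at a time (the abstract does not spell out the readout scheme), each pixel gets about 10 µs per frame. A trivial sketch of that arithmetic; the function name is hypothetical:

```python
def per_pixel_dwell_s(frame_rate_hz: float, n_pixels: int) -> float:
    """Time available per pixel when pixels are scanned sequentially every frame."""
    return 1.0 / (frame_rate_hz * n_pixels)


print(f"{per_pixel_dwell_s(1000.0, 100) * 1e6:.1f} us")  # 10.0 us
```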

Microsoft Discontinues Kinect

Alex Kipman, Microsoft's 3D vision architect, and Matthew Lapsen, GM of Xbox Devices Marketing, told Co.Design in an interview that Microsoft is discontinuing the Kinect ToF device. While Kinect as a standalone product is leaving the market, Microsoft is not abandoning ToF technology: it is currently used in Microsoft HoloLens, and a new version of it is being developed. Kinect’s ToF team has been re-targeted to build other Microsoft products, including the Cortana voice assistant, the Windows Hello biometric facial ID system, and a context-aware user interface for the future that Microsoft dubs Gaze, Gesture, and Voice (GGV).

Since its launch in 2010, Microsoft has sold 35 million Kinect units, making it the best-selling 3D camera until iPhone X sales reach that milestone sometime next year. In its first few years, Kinect was based on PrimeSense structured light technology. According to AppleInsider, Microsoft sold 24 million PrimeSense-powered Kinect-1 units by February 2013, a little over two years after launch. This leaves 11 million units for the Canesta ToF-based Kinect-2.

Another interesting detail is the progress in power consumption of Microsoft's 3D cameras over the years: 50W in the structured light-based Kinect-1, 25W in the ToF-based Kinect-2, and 1.5W in the ToF-based HoloLens.

Wednesday, October 25, 2017

Doogee Demos its 3D Face Unlock

Chinese smartphone maker Doogee publishes a YouTube video demoing 3D face unlock in its Mix 2 smartphone:

ON Semi Hayabusa Sensors Feature Super-Exposure Capability

BusinessWire: ON Semiconductor announces the Hayabusa CMOS sensor platform for automotive applications such as ADAS, mirror replacement, rear and surround view systems, and autonomous driving. The Hayabusa platform features a 3.0μm BSI pixel design that delivers a charge capacity of 100,000e-, said to be the highest in the industry, on-chip Super-Exposure capability for HDR with LED flicker mitigation (LFM), and real-time functional safety and automotive grade qualification.
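For reference, sensor dynamic range in decibels is conventionally 20·log10 of the ratio between the largest and smallest resolvable signals, so a 120dB HDR figure such as the one ON Semi quotes for Hayabusa corresponds to a 10^6:1 ratio. A single exposure with a 100,000e- charge capacity would need a 0.1e- noise floor to reach that; the 2e- floor used below is a hypothetical value, not an ON Semi specification, and illustrates why reaching 120dB takes more than one ordinary exposure:

```python
import math


def dynamic_range_db(max_signal_e: float, noise_floor_e: float) -> float:
    """Dynamic range in dB: 20*log10(max signal / noise floor)."""
    return 20.0 * math.log10(max_signal_e / noise_floor_e)


def ratio_from_db(dr_db: float) -> float:
    """Inverse: the signal ratio corresponding to a dB figure."""
    return 10.0 ** (dr_db / 20.0)


print(f"{ratio_from_db(120.0):.0f}")               # 1000000
print(f"{dynamic_range_db(100_000, 2.0):.1f} dB")  # 94.0 dB, hypothetical 2 e- noise floor
```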

“The Hayabusa family enables automakers to meet the evolving standards for ADAS such as European NCAP 2020, and offer next-generation features such as electronic mirrors and high-resolution surround view systems with anti-flicker technology. The scalable approach of the sensors from ½” to ¼” optical sizes reduces customer development time and effort for multiple car platforms, giving them a time-to-market advantage.” said Ross Jatou, VP and GM of the Automotive Solutions Division at ON Semiconductor. “ON Semiconductor has been shipping image sensors with this pixel architecture in high-end digital cameras for cinematography and television. We are now putting this proven architecture into new sensors developed from the ground up for automotive standards.”

The high charge capacity of this pixel design enables every device in the Hayabusa family to deliver Super-Exposure capability, which results in 120dB HDR images with LFM without sacrificing low-light sensitivity. With the widespread use of LEDs for front and rear lighting as well as traffic signs, the LFM capability of the platform makes certain that pulsed light sources do not appear to flicker, which can lead to driver distraction or, in the case of front facing ADAS, the misinterpretation of a scene by machine vision algorithms.
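The flicker arises because many LED lamps are driven by pulse-width modulation (PWM): a short exposure can fall entirely within the LED's off phase, so the lamp appears dark in some frames and lit in others. A minimal sketch of that timing check, assuming an ideal square-wave PWM source; the 90 Hz frequency, 10% duty cycle and exposure times are hypothetical, and this is not ON Semi's LFM method:

```python
def exposure_sees_led(exposure_start_s: float, exposure_s: float,
                      pwm_hz: float, duty: float) -> bool:
    """Return True if the exposure window overlaps any on-phase of a PWM LED.

    The LED is assumed on during [n*T, n*T + duty*T) for every integer n,
    where T = 1/pwm_hz.
    """
    period = 1.0 / pwm_hz
    if exposure_s >= period:
        return True  # the window spans a full PWM period, so it must catch an on-phase
    phase = exposure_start_s % period  # exposure start within the current PWM period
    on_time = duty * period
    if phase < on_time:
        return True  # exposure starts while the LED is on
    # Otherwise the LED next turns on at the start of the following period.
    return phase + exposure_s > period


# Hypothetical 90 Hz LED, 10% duty cycle (on ~1.1 ms of each ~11.1 ms period).
print(exposure_sees_led(0.005, 0.001, 90.0, 0.1))  # False: 1 ms exposure lands in the off phase
print(exposure_sees_led(0.005, 0.012, 90.0, 0.1))  # True: exposure longer than one period
```

Under these assumptions, any exposure at least one PWM period long always catches the LED, while short exposures depend on their phase; that phase dependence is what shows up as flicker.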

The first product in this family, the 2.6MP AR0233 CMOS sensor, is capable of running 1080p at 60 fps. Samples are available now to early access customers.

Digitimes: Sony to Allocate More Resources to Automotive Sensors

Digitimes sources say that Sony's efforts to penetrate the automotive imaging market will start to bear fruit in 2018:

"Sony is looking to allocate more of its available production capacity for CIS for advanced driver assistance systems (ADAS) and other automotive electronics applications in a move to gradually shift its focus from smartphones and other mobile devices, said the sources. Currently, about half of Sony's CIS capacity is being reserved by the world's first-tier handset vendors.

Mobile devices remain the largest application for CIS, but self-driving vehicles have been identified by CIS suppliers as the "blue ocean" and will overtake mobile devices as the leading application for CIS, the sources indicated. CIS demand for ADAS will be first among all auto electronics segments set to boom starting 2018, the sources said."

Tuesday, October 24, 2017

News from Australia

BusinessWire: Renesas, Australian Semiconductor Technology Company Pty Ltd (ASTC), and VLAB Works, a subsidiary of ASTC, announce a joint development of the VLAB/IMP-TASimulator virtual platform (VP) for Renesas’ R-Car V3M, an automotive SoC for ADAS and in-vehicle infotainment systems. The VP simulates image recognition and cognitive IPs in the R-Car V3M SoC and realizes embedded software development using a PC only, which enables the VP to shorten development time as well as improve software quality.

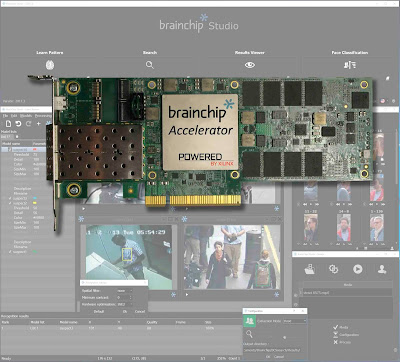

BrainChip Holdings announces that it has shipped its first BrainChip Accelerator card to a major European automobile manufacturer.

As the first commercial implementation of a hardware-accelerated spiking neural network (SNN) system, BrainChip Accelerator will be evaluated for use in ADAS and Autonomous Vehicle applications.

BrainChip Accelerator is said to increase the performance of object recognition provided by BrainChip Studio software and algorithms. The low-power accelerator card can detect, extract and track objects using a proprietary SNN technology. It provides a 7x improvement in images/second/watt, compared to traditional convolutional neural networks accelerated by GPUs.
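BrainChip's SNN implementation is proprietary and not described here. For readers unfamiliar with spiking networks, the sketch below shows a generic leaky integrate-and-fire (LIF) neuron, a common SNN building block, and does not represent BrainChip's design. The neuron integrates its input, leaks toward rest, and emits a discrete spike only when a threshold is crossed. Downstream work happens only on spikes, which is the usual argument for SNN power efficiency. All parameter values are hypothetical.

```python
def lif_spike_times(current, steps=200, dt=1e-3, tau=20e-3,
                    v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Simulate one leaky integrate-and-fire neuron driven by a constant input.

    Uses forward-Euler integration of tau * dv/dt = -v + R * I and returns
    the indices of the time steps at which the neuron spikes.
    """
    v = v_reset
    spikes = []
    for step in range(steps):
        v += (dt / tau) * (-v + resistance * current)  # leak plus input drive
        if v >= v_threshold:
            spikes.append(step)
            v = v_reset  # fire, then reset the membrane potential
    return spikes


strong = lif_spike_times(current=2.0)  # drive above threshold: periodic spikes
weak = lif_spike_times(current=0.5)    # settles below threshold: no output at all
print(len(strong) > 0, len(weak))      # True 0
```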

Bob Beachler, BrainChip’s SVP for Marketing and Business Development, said: “Our spiking neural network provides instantaneous “one-shot” learning, is fast at detecting, extracting and tracking objects, and is very low-power. These are critical attributes for automobile manufacturers processing the large amounts of video required for ADAS and AV applications. We look forward to working with this world-class automobile manufacturer in applying our technology to meet its requirements.”

A few slides from the recent company presentation:

Monday, October 23, 2017

Omnivision Unveils First Nyxel Product for Security Applications

PRNewswire: OmniVision introduces the OS05A20, the first image sensor to implement OmniVision's Nyxel NIR technology. This 5MP color image sensor leverages both the PureCel pixel and Nyxel technology and achieves a significant improvement in QE when compared with OmniVision's earlier-generation sensors. However, no QE numbers have been released so far.

Nyxel technology combines thick-silicon pixel architectures with extended DTI and careful management of wafer surface texture to improve QE up to 3x for 850nm and up to 5x for 940nm, while maintaining all other image-quality metrics.
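A QE gain does not translate one-to-one into image quality. In the photon-shot-noise-limited regime, SNR grows only as the square root of the collected signal, so a 5x QE gain at 940nm is worth about sqrt(5) in SNR. In very low light, where read noise dominates, the benefit is closer to the full QE factor. The sketch below shows the shot-limited case. The baseline QE and photon count are hypothetical, and only the 3x and 5x factors come from the text.

```python
import math


def photoelectrons(photons: float, qe: float) -> float:
    """Mean signal electrons collected for a given photon count and QE."""
    return photons * qe


def shot_limited_snr(electrons: float) -> float:
    """SNR when photon shot noise dominates: N / sqrt(N) = sqrt(N)."""
    return math.sqrt(electrons)


# Hypothetical baseline QE at 940 nm and photon count per pixel
baseline_qe = 0.04
photons = 10_000
old = shot_limited_snr(photoelectrons(photons, baseline_qe))
new = shot_limited_snr(photoelectrons(photons, 5 * baseline_qe))
print(round(new / old, 3))  # 2.236, i.e. sqrt(5)
```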

Sony Releases Stacked Automotive Sensor Meeting Mobileye Spec

PRNewswire: Sony releases IMX324, a 1/1.7-inch stacked CMOS sensor with 7.42MP resolution and RCCC (Red-Clear-Clear-Clear) color filter for forward-sensing cameras in ADAS. The IMX324 is expected to offer compatibility with the "EyeQ 4" and "EyeQ 5" image processors currently being developed by Mobileye, an Intel Company. Until now, Mobileye reference designs relied mostly on ON Semi sensors.

Sony will begin shipping samples in November 2017. IMX324 is said to be the industry's first automotive grade stacked image sensor meeting quality standards and functions required for automotive applications.

This image sensor offers approximately three times the horizontal resolution of conventional products (IMX224MQV), enabling image capture of objects such as road signs up to 160m away (with a 32° FOV lens). The sensor's pixel binning mode achieves a low-light sensitivity of 2666 mV. The sensor is equipped with a unique function that alternately captures dark sections at high-sensitivity settings and bright sections at high resolution, enabling high-precision image recognition when combined with post-signal processing.
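Why horizontal resolution matters for the 160m figure: with a pinhole camera model, the number of pixels landing on a target scales linearly with the horizontal pixel count. The sketch below uses the 160m distance and 32° field of view from the text. The ~3840 horizontal pixels (a rough guess for a 7.42MP array) and the 0.6m sign width are assumptions, and lens distortion is ignored.

```python
import math


def pixels_on_target(distance_m, hfov_deg, h_pixels, target_width_m):
    """Horizontal pixel count covering a target at a given distance.

    Pinhole model: the scene width at distance d is 2 * d * tan(HFOV / 2),
    sampled uniformly by h_pixels (lens distortion ignored).
    """
    scene_width = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return h_pixels * target_width_m / scene_width


# 160 m and 32 deg are from the text; 3840 px and a 0.6 m sign are assumptions
new = pixels_on_target(160, 32, 3840, 0.6)
old = pixels_on_target(160, 32, 1280, 0.6)  # a sensor with 1/3 the horizontal pixels
print(f"{new:.1f} px vs {old:.1f} px across the sign")  # roughly 25 vs 8
```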

Sunday, October 22, 2017

Smartphone Imaging Market Reports

InstantFlashNews quotes Taiwan-based Isaiah Research on 3D-imaging-capable smartphones, saying that the market will continue to be dominated by Apple until 2019, when iPhones are expected to account for 55% of the total 290M units:

Isaiah Research increases forecast for dual camera phones to 270M units in 2017:

The earlier Isaiah reports from July 2016 gave significantly lower numbers of 170-180M units:

Gartner reports a decrease in global image sensor revenue from 2015 to 2016: "The CMOS image sensor market declined 1.7% in 2016, mainly because of a price drop and saturation of smartphones, especially high-end smartphones." The top 5 companies accounted for 88.9% of global CIS revenue in 2016, with the top 3 companies holding 78.9% of the market:

3-Layer Color and Polarization Sensitive Imager

A group of researchers from the University of Illinois at Urbana-Champaign, Washington University in St. Louis, and the University of Cambridge, UK, published a paper "Bio-inspired color-polarization imager for real-time in situ imaging" by Missael Garcia, Christopher Edmiston, Radoslav Marinov, Alexander Vail, and Viktor Gruev. The image sensor is said to be inspired by mantis shrimp vision, although to me its approach is more reminiscent of Foveon's:

"Nature has a large repertoire of animals that take advantage of naturally abundant polarization phenomena. Among them, the mantis shrimp possesses one of the most advanced and elegant visual systems nature has developed, capable of high polarization sensitivity and hyperspectral imaging. Here, we demonstrate that by shifting the design paradigm away from the conventional paths adopted in the imaging and vision sensor fields and instead functionally mimicking the visual system of the mantis shrimp, we have developed a single-chip, low-power, high-resolution color-polarization imaging system.

Our bio-inspired imager captures co-registered color and polarization information in real time with high resolution by monolithically integrating nanowire polarization filters with vertically stacked photodetectors. These photodetectors capture three different spectral channels per pixel by exploiting wavelength-dependent depth absorption of photons."

"Our bio-inspired imager comprises 1280 by 720 pixels with a dynamic range of 62 dB and a maximum signal-to-noise ratio of 48 dB. The quantum efficiency is above 30% over the entire visible spectrum, while achieving high polarization extinction ratios of ∼40 on each spectral channel. This technology is enabling underwater imaging studies of marine species, which exploit both color and polarization information, as well as applications in biomedical fields."

A Youtube video shows nice pictures coming out of the camera:
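To unpack the polarization side and the quoted figures: division-of-focal-plane polarimeters typically place nanowire polarizers at four orientations (0°, 45°, 90°, 135°). I assume that layout here, since the abstract does not spell it out. From those four readings one gets the linear Stokes parameters, the degree of linear polarization (DoLP) and the angle of polarization. The sketch also shows what a finite extinction ratio of ~40 does (fully polarized light reads as DoLP of about 0.95 rather than 1) and converts the dB figures. The full-well estimate assumes the 48dB peak SNR is purely shot-noise limited, which is my assumption and not a number from the paper. Demosaicing of the polarizer mosaic is omitted.

```python
import math


def stokes_from_dofp(i0, i45, i90, i135):
    """Linear Stokes parameters from four polarizer orientations.

    Assumes a 0/45/90/135 deg filter layout (an assumption for this sketch).
    """
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs vertical component
    s2 = i45 - i135                     # +45 vs -45 deg component
    return s0, s1, s2


def dolp_aop(s0, s1, s2):
    """Degree of linear polarization (0..1) and angle of polarization in degrees."""
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aop = 0.5 * math.degrees(math.atan2(s2, s1))
    return dolp, aop


def max_measurable_dolp(extinction_ratio):
    """DoLP read out for fully polarized light through polarizers with a finite
    extinction ratio Tmax / Tmin: (Tmax - Tmin) / (Tmax + Tmin)."""
    return (extinction_ratio - 1) / (extinction_ratio + 1)


def db_to_ratio(db):
    """Convert a 20*log10 figure (dynamic range, SNR) to a linear ratio."""
    return 10 ** (db / 20.0)


# Fully polarized light at 30 deg through ideal polarizers (Malus's law)
intensities = [math.cos(math.radians(a - 30)) ** 2 for a in (0, 45, 90, 135)]
print(dolp_aop(*stokes_from_dofp(*intensities)))  # approximately (1.0, 30.0)

print(round(max_measurable_dolp(40), 3))  # 0.951 for the quoted ~40 extinction ratio
print(round(db_to_ratio(62)))             # 62 dB dynamic range, about 1259:1
# Assumption: if the 48 dB peak SNR were purely shot-noise limited, SNR = sqrt(N)
print(round(db_to_ratio(48) ** 2))        # about 63,000 electrons (an estimate)
```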

Saturday, October 21, 2017

Infineon, Autoliv on Automotive Imaging Market

Infineon publishes an "Automotive Conference Call" presentation dated Oct. 10, 2017. A few interesting slides show camera and LiDAR content in the cars of the future:

An Autoliv CEO presentation dated Sept. 28, 2017 gives a bright outlook on automotive imaging:

Friday, October 20, 2017

Trinamix Distance Sensing Technology Explained

BASF spin-off Trinamix publishes a nice technology page with Youtube videos explaining its depth sensing principles. They call it "Focus-Induced Photoresponse" (FIP):

"FIP takes advantage of a particular phenomenon in photodetector devices: an irradiance-dependent photoresponse. The photoresponse of these devices depends not only on the amount of light incident, but also on the size of the light spot on the detector. This phenomenon allows to distinguish whether the same amount of light is focused or defocused on the sensor. We call this the “FIP effect” and use it to measure distance.

The picture illustrates how the FIP effect can be utilized for distance measurements. The photocurrent of the photodetector reaches its maximum when the light is in focus and decreases symmetrically outside the focus. A change of the distance between light source and lens results in such a change of the spot size on the sensor. By analyzing the photoresponse, the distance between light source and lens can be deduced."
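A single FIP reading is ambiguous. The signal depends on the unknown source power, and it falls off on both sides of focus. The minimal sketch below rests on three assumptions: a thin-lens model, a made-up power-law response (signal proportional to spot area times irradiance to the power 1.3), and two detectors placed at different distances behind the lens. It shows how the ratio of the two signals cancels the source power. That ratio varies monotonically with distance over a limited range, so it can be inverted by bisection. All parameter values are hypothetical, and this is not a description of Trinamix's actual implementation.

```python
import math

# Hypothetical optics; none of these values come from Trinamix
FOCAL_M = 0.02        # lens focal length
APERTURE_M = 0.01     # aperture diameter
SPOT_FLOOR_M = 5e-6   # residual spot size at best focus (diffraction, aberrations)
GAMMA = 1.3           # assumed superlinear response: signal ~ area * irradiance**GAMMA


def image_distance(u):
    """Thin-lens image distance for an object at distance u (u > FOCAL_M)."""
    return FOCAL_M * u / (u - FOCAL_M)


# Two detectors at different axial positions: one focused near, one focused far
S_NEAR = image_distance(0.3)
S_FAR = image_distance(10.0)


def spot_diameter(u, s):
    """Geometric blur-spot diameter on a detector at distance s behind the lens."""
    v = image_distance(u)
    blur = APERTURE_M * abs(s - v) / v
    return math.hypot(blur, SPOT_FLOOR_M)


def photocurrent(power, u, s):
    """Irradiance-dependent response: the same power gives more signal when focused."""
    area = math.pi * spot_diameter(u, s) ** 2 / 4
    return area * (power / area) ** GAMMA


def quotient(u, power=1.0):
    # power**GAMMA cancels in the ratio, leaving a function of distance only
    return photocurrent(power, u, S_NEAR) / photocurrent(power, u, S_FAR)


def estimate_distance(q, lo=0.5, hi=5.0, iterations=60):
    """Invert quotient() by bisection; it falls monotonically with u on [lo, hi]."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if quotient(mid) > q:
            lo = mid   # quotient still too high: the object is farther away
        else:
            hi = mid
    return 0.5 * (lo + hi)


measured = quotient(2.0, power=3.7)  # source power is unknown to the estimator
print(round(estimate_distance(measured), 3))  # 2.0
```

The monotonic range comes from placing both detector positions outside the image-distance interval of the working range. Objects outside [0.5 m, 5 m] would need a different detector arrangement.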

Trinamix has also started production of Hertzstück PbS SWIR photodetectors, with PbSe ones to follow:

Thursday, October 19, 2017

Invisage Acquired by Apple?

Reportedly, Invisage and Apple have reached some sort of acquisition deal. Some Invisage employees have joined Apple, while others are apparently looking for jobs. Although the deal has never been officially announced, I have received unofficial confirmations of it from 3 independent sources.

Update: According to 2 sources, the deal was closed in July this year.

Somewhat old Invisage Youtube videos are still available and show the company's visible-light technology, although Invisage worked on IR sensing in more recent years:

Update #2: There are a few more indications that Invisage has been acquired. Nokia Growth Partners (NGP), which participated in the 2014 investment round, shows Invisage in its exits list:

InterWest Partners, which also invested in 2014, now lists Invisage among its non-current investments:

Samsung VR Camera Features 17 Imagers

Samsung introduces the 360 Round, a camera for developing and streaming high-quality 3D content for VR experience. The 360 Round uses 17 lenses—eight stereo pairs positioned horizontally and one single lens positioned vertically—to livestream 4K 3D video and spatial audio, and create engaging 3D images with depth.

If such cameras become widely adopted, they could easily become a major market for image sensors:

Google Pixel 2 Smartphone Features Stand-Alone HDR+ Processor

Ars Technica reports that the Google Pixel 2 smartphone features a separate Google-designed image processor chip, the "Pixel Visual Core." It is said "to handle the most challenging imaging and machine learning applications," and the company is "already preparing the next set of applications" designed for the hardware. The Pixel Visual Core has its own CPU (a low-power ARM A53 core), DDR4 RAM, eight IPU cores, and PCIe and MIPI interfaces. Google says the company's HDR+ image processing can run "5x faster and at less than 1/10th the energy" than it currently does on the main CPU. The new core will be enabled in the forthcoming Android Oreo 8.1 (MR1) update.

The new IPU cores are intended to be programmed in the Halide language for image processing and in TensorFlow for machine learning. A custom Google-made compiler optimizes the code for the underlying hardware.
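HDR+ works on bursts: as Google describes it, the camera captures several frames, aligns them and merges them to reduce noise. The sketch below illustrates only the merge idea and assumes the frames are already aligned. It uses plain per-pixel averaging, plus an optional and deliberately crude rejection of samples that differ too much from a reference frame, as a stand-in for motion handling. It is written in Python rather than Halide for readability. It is not Google's merge algorithm, which is considerably more sophisticated.

```python
import random
import statistics


def merge_burst(frames, ref_index=0, max_diff=None):
    """Average a burst of already-aligned frames pixel by pixel.

    If max_diff is given, samples differing from the reference frame by more
    than max_diff are rejected (a crude stand-in for ghost/motion rejection).
    The reference sample always passes, so no pixel ends up with zero samples.
    """
    ref = frames[ref_index]
    merged = []
    for i, ref_value in enumerate(ref):
        samples = [f[i] for f in frames
                   if max_diff is None or abs(f[i] - ref_value) <= max_diff]
        merged.append(sum(samples) / len(samples))
    return merged


# Synthetic flat scene with Gaussian noise; 8 frames, fixed seed for repeatability
rng = random.Random(0)
scene = [100.0] * 10_000
burst = [[p + rng.gauss(0, 10) for p in scene] for _ in range(8)]
ratio = statistics.pstdev(merge_burst(burst)) / statistics.pstdev(burst[0])
print(round(ratio, 2))  # close to 1/sqrt(8), about 0.35

# A "moving object" in one frame is rejected instead of leaving a ghost
print(merge_burst([[10, 10], [10, 110], [10, 10]], max_diff=20))  # [10.0, 10.0]
```

Averaging N frames cuts the noise by about sqrt(N), which is what lets a burst of deliberately short (highlight-preserving) exposures end up with the noise level of a much longer one.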

Google has also published an article explaining HDR+ and portrait mode, which the new core is supposed to accelerate, as well as a video explaining the Pixel 2 camera features:

Wednesday, October 18, 2017

Basler Compares Image Sensors for Machine Vision and Industrial Applications

Basler presents EMVA 1288 measurements of the image sensors in its cameras. It's quite interesting to compare CCD with CMOS sensors, and Sony with other companies, in terms of QE, Qsat, dark noise, etc.:
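Two derived figures make such tables easier to compare. Dynamic range is roughly saturation capacity over temporal dark noise. Maximum SNR is reached at saturation where shot noise dominates, giving sqrt(Qsat). EMVA 1288 itself defines these quantities more carefully (in the photon domain, via the absolute sensitivity threshold). The sketch below uses the common electron-domain approximations, and the two example sensors are hypothetical, not Basler data.

```python
import math


def dynamic_range_db(q_sat_e: float, dark_noise_e: float) -> float:
    """Approximate dynamic range: saturation capacity over dark noise, in dB."""
    return 20 * math.log10(q_sat_e / dark_noise_e)


def max_snr_db(q_sat_e: float) -> float:
    """Maximum SNR at saturation when shot noise dominates: sqrt(Qsat), in dB."""
    return 20 * math.log10(math.sqrt(q_sat_e))


# Hypothetical sensors: (saturation capacity in e-, temporal dark noise in e-)
sensors = {
    "sensor A (large well, noisier)": (20_000, 10.0),
    "sensor B (smaller well, low noise)": (10_000, 2.5),
}
for name, (qsat, dark) in sensors.items():
    print(f"{name}: DR {dynamic_range_db(qsat, dark):.1f} dB, "
          f"SNRmax {max_snr_db(qsat):.1f} dB")
```

The example shows the usual trade-off: the larger well wins on peak SNR, while the low-noise sensor wins on dynamic range.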

5 Things to Learn from AutoSens 2017

EMVA publishes "AutoSens Show Report: 5 Things We Learned This Year" by Marco Jacobs, VP of Marketing, Videantis. The five important things are:

- The devil is in the detail. Sort of obvious; see some examples in the article.

- No one sensor to rule them all. Different image sensors and LiDARs, each optimized for a different sub-task.

- No bold predictions. That is, nobody knows when autonomous driving will arrive on the market.

- Besides the drive itself, what will an autonomous car really be like?

- Deep learning a must-have tool for everyone. Sort of a common statement, although the approaches vary: some put the intelligence into the sensors, while others keep the sensors dumb and concentrate the processing in a central unit.

DENSO and Fotonation Collaborate

BusinessWire: DENSO and Xperi-FotoNation start joint technology development of cabin sensing based on image recognition. DENSO expects to significantly improve the performance of its Driver Status Monitor, an active safety product used in trucks since 2014. Improved versions of such products will also be used in next-generation passenger vehicles, including a system to help drivers return to driving mode during Level 3 autonomous driving.

Using FotoNation’s facial image recognition and neural network technologies, detection accuracy will be increased remarkably by detecting many more features, instead of relying on the conventional detection method based on the relative positions of the eyes, nose, mouth, and other facial regions. Moreover, DENSO will develop new functions, such as detecting the driver’s gaze direction and facial expressions more accurately, to understand the driver’s state of mind and help create more comfortable vehicles.

“Understanding the status of the driver and engaging them at the right time is an important component for enabling the future of autonomous driving,” said Yukihiro Kato, senior executive director, Information & Safety Systems Business Group of DENSO. “I believe this collaboration with Xperi will help accelerate our innovative ADAS product development by bringing together the unique expertise of both our companies.”

“We are excited to partner with DENSO to innovate in such a dynamic field,” said Jon Kirchner, CEO of Xperi Corporation. “This partnership will play a significant role in paving the way to the ultimate goal of safer roadways through use of our imaging and facial analytics technologies and DENSO’s vast experience in the space.”

Tuesday, October 17, 2017

AutoSens 2017 Awards

The AutoSens conference, held on Sept. 20-21 in Brussels, Belgium, has published its awards. Some of the image sensor-relevant ones:

Most Engaging Content

- First place: Vladimir Koifman, Image Sensors World (yes, this is me!)

- Highly commended: Junko Yoshida, EE Times

Hardware Innovation

- First place: Renesas

- Highly commended: STMicroelectronics

Most Exciting Start-Up

- Winner: Algolux

- Highly commended: Innoviz Technologies

LG, Rockchip and CEVA Partner on 3D Imaging

PRNewswire: CEVA partners with LG to deliver a high-performance, low-cost smart 3D camera for consumer electronics and robotic applications.

The 3D camera module incorporates a Rockchip RK1608 coprocessor with multiple CEVA-XM4 imaging and vision DSPs to perform biometric face authentication, 3D reconstruction, gesture/posture tracking, obstacle detection, AR and VR.

"There is a clear demand for cost-efficient 3D camera sensor modules to enable an enriched user experience for smartphones, AR and VR devices and to provide a robust localization and mapping (SLAM) solution for robots and autonomous cars," said Shin Yun-sup, principal engineer at LG Electronics. "Through our collaboration with CEVA, we are addressing this demand with a fully-featured compact 3D module, offering exceptional performance thanks to our in-house algorithms and the CEVA-XM4 imaging and vision DSP."

Monday, October 16, 2017

Ambarella Loses Key Customers

The Motley Fool publishes an analysis of Ambarella's performance over the last year. The company has lost some of its key customers (GoPro, Hikvision and DJI), while the new Google Clips camera opted for a non-Ambarella processor as well:

"Faced with shrinking margins, GoPro needed to buy cheaper chipsets to cut costs. It also wanted a custom design which wasn't readily available to competitors like Ambarella's SoCs. That's why it completely cut Ambarella out of the loop and hired Japanese chipmaker Socionext to create a custom GP1 SoC for its new Hero 6 cameras.

DJI also recently revealed that its portable Spark drone didn't use an Ambarella chipset. Instead, the drone uses the Myriad 2 VPU (visual processing unit) from Intel's Movidius. DJI previously used the Myriad 2 alongside an Ambarella chipset in its flagship Phantom 4, but the Spark uses the Myriad 2 for both computer vision and image processing tasks.

Google also installed the Myriad 2 in its Clips camera, which automatically takes burst shots by learning and recognizing the faces in a user's life.

Ambarella needs the CV1 to catch up to the Myriad 2, but that could be tough with the Myriad's first-mover's advantage and Intel's superior scale.

To top it all off, Chinese chipmakers are putting pressure on Ambarella's security camera business in China."

Pikselim Demos Low-Light Driver Vision Enhancement

Pikselim publishes a night-time Driver Vision Enhancement (DVE) video made with its low-light CMOS sensor placed behind the vehicle's windshield with the headlights off (the sensor operates in 640x512 format at 15 fps in global shutter mode, with f/0.95 optics and off-chip denoising):
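The optics and frame rate in this demo do a lot of the low-light work. Image-plane irradiance scales roughly as 1/N² with the f-number N (distant objects, equal lens transmission and no vignetting assumed). The frame rate also caps the per-frame exposure at 1/fps, ignoring readout time. The f/2.0, 30 fps reference setup below is a hypothetical comparison point, not something Pikselim states.

```python
def relative_irradiance(f_number: float, reference_f_number: float) -> float:
    """Image-plane irradiance relative to a reference lens, scaling as 1/N^2."""
    return (reference_f_number / f_number) ** 2


def max_exposure_s(fps: float) -> float:
    """Upper bound on per-frame exposure time at a given frame rate."""
    return 1.0 / fps


# f/0.95 and 15 fps are from the text; f/2.0 at 30 fps is a hypothetical baseline
lens_gain = relative_irradiance(0.95, 2.0)
time_gain = max_exposure_s(15) / max_exposure_s(30)
print(f"lens: {lens_gain:.2f}x, exposure: {time_gain:.1f}x, "
      f"combined: {lens_gain * time_gain:.1f}x signal per frame")
```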

Sunday, October 15, 2017

Yole on Automotive LiDAR Market

Yole Developpement publishes its AutoSens Brussels 2017 presentation "Application, market & technology status of the automotive LIDAR." A few slides from the presentation:

Sony Announces Three New Sensors

Sony has added three new sensors to its flyer table: the 8.3MP IMX334LQR based on a 2um pixel, and the 2.9MP IMX429LLJ and 2MP IMX430LLJ, both based on a 4.5um global shutter pixel. The new sensors are said to have high sensitivity and are aimed at security and surveillance applications.

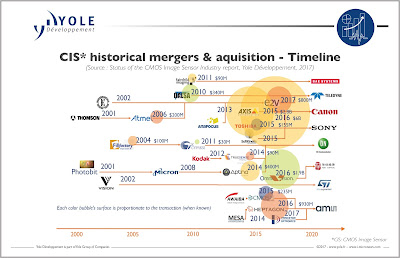
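Pixel pitch drives die size as much as resolution does. The sketch below computes the active-array width, height and diagonal from pixel counts and pitch. The pitches are from the text, but the array dimensions are assumptions chosen to roughly match the quoted megapixel counts, not Sony datasheet values. Under those assumptions, a 2MP array of 4.5um global shutter pixels ends up physically larger than an 8.3MP array of 2um pixels.

```python
import math


def array_size_mm(h_pixels: int, v_pixels: int, pitch_um: float):
    """Width, height and diagonal of the active pixel array in millimetres."""
    width = h_pixels * pitch_um / 1000.0
    height = v_pixels * pitch_um / 1000.0
    return width, height, math.hypot(width, height)


# Pitches from the text; array dimensions are assumptions for illustration
examples = {
    "8.3MP, 2.0um (assumed 3840x2160)": (3840, 2160, 2.0),
    "2MP, 4.5um (hypothetical 1920x1080)": (1920, 1080, 4.5),
}
for label, (h, v, pitch) in examples.items():
    w, ht, d = array_size_mm(h, v, pitch)
    print(f"{label}: {w:.2f} x {ht:.2f} mm, diagonal {d:.2f} mm")
```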

Yole Image Sensors M&A Review

IMVE publishes an article "Keeping Up With Consolidation" by Pierre Cambou, Yole Developpement image sensor analyst. It includes a nice chart of the large historical mergers and acquisitions:

"For the source of future M&A, one should rather look toward the decent number of machine vision sensor technology start-ups, companies like Softkinetic, which was purchased by Sony in 2015, and Mesa, which was acquired by Ams, in 2014. There are a certain number of interesting start-ups right now, such as PMD, Chronocam, Fastree3D, SensL, Sionyx, and Invisage. Beyond the start-ups, and from a global perspective, there is little room for a greater number of deals at sensor level, because almost all players have recently been subject to M&A."

Saturday, October 14, 2017

Waymo Self-Driving Car Relies on 5 LiDARs and 1 Surround-View Camera

Alphabet's Waymo has published a Safety Report with some details on its self-driving car sensors, namely 5 LiDARs and one 360-deg color camera:

LiDAR (Laser) System

LiDAR (Light Detection and Ranging) works day and night by beaming out millions of laser pulses per second—in 360 degrees—and measuring how long it takes to reflect off a surface and return to the vehicle. Waymo’s system includes three types of LiDAR developed in-house: a short-range LiDAR that gives our vehicle an uninterrupted view directly around it, a high-resolution mid-range LiDAR, and a powerful new generation long-range LiDAR that can see almost three football fields away.

Vision (Camera) System

Our vision system includes cameras designed to see the world in context, as a human would, but with a simultaneous 360-degree field of view, rather than the 120-degree view of human drivers. Because our high-resolution vision system detects color, it can help our system spot traffic lights, construction zones, school buses, and the flashing lights of emergency vehicles. Waymo’s vision system is comprised of several sets of high-resolution cameras, designed to work well at long range, in daylight and low-light conditions.
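The LiDAR paragraph above boils down to pulsed time-of-flight ranging. Range equals c·t/2, because the pulse travels out and back. The sketch below converts between range and round-trip time. Two inputs are my assumptions. First, "almost three football fields" is read as about 274m (three 100-yard fields). Second, a single-channel pulse rate is used to illustrate the maximum unambiguous range; the report does not say how the "millions of laser pulses per second" are distributed across channels.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in vacuum


def range_from_round_trip(t_s):
    """Target range for a measured pulse round-trip time."""
    return SPEED_OF_LIGHT * t_s / 2.0


def round_trip_time(range_m):
    """Round-trip time for a target at range_m."""
    return 2.0 * range_m / SPEED_OF_LIGHT


def max_unambiguous_range(pulse_rate_hz):
    """Farthest range whose echo returns before the next pulse on the same channel."""
    return SPEED_OF_LIGHT / (2.0 * pulse_rate_hz)


target_m = 3 * 91.44  # assumed: three 100-yard football fields
print(f"{round_trip_time(target_m) * 1e6:.2f} us round trip")  # 1.83 us
print(f"{max_unambiguous_range(500e3):.0f} m at a hypothetical 500 kHz per channel")
```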

Half a year ago, Bloomberg published an animated GIF showing how Waymo's 360-deg camera is cleaned:
